A Review of Prior Research on the Acceptance Structure of Generative Search: Defining Algorithmic Legitimacy Shift (ALS) through the Integration of Supply-Side LLM-IR and Demand-Side User Behavior

- kanna qed

- January 24

- Reading time: 7 min

1. Executive Synthesis: Integrating Supply and Demand Perspectives

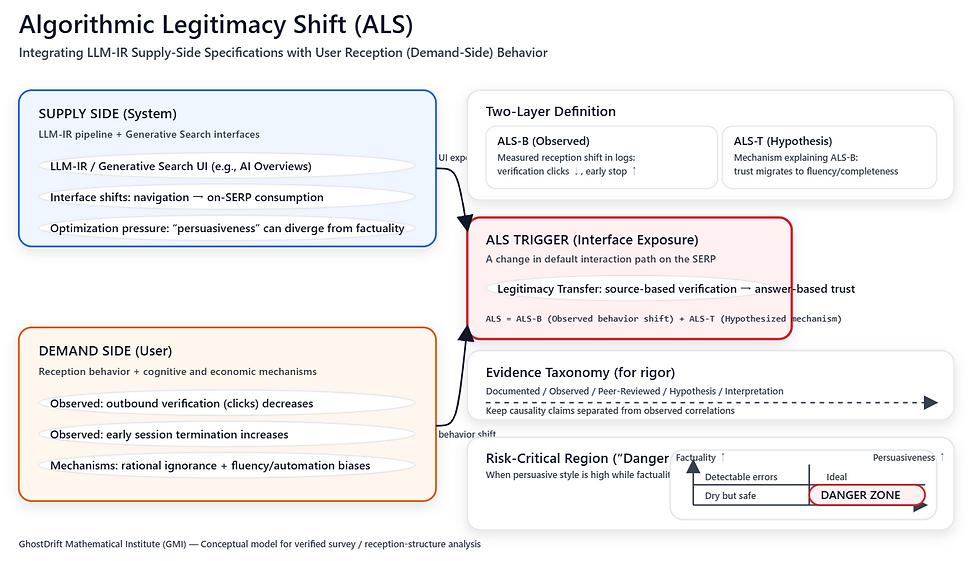

This report (Part 2) presents a structured literature review of the "demand-side" dynamics of generative search, specifically user behavior and acceptance mechanisms. Our previous report, "Literature Review on Generative Search/LLM-IR and Structural Limitations (Supply Side)," addressed the technological transformation of search systems from Neural IR to LLM-IR; this report analyzes how these technologies are altering user cognition and behavior.

By integrating "supply" specifications with "demand" observations, we define the Algorithmic Legitimacy Shift (ALS) through two distinct layers: a behavioral layer (Observed) and a theoretical layer (Hypothesis).

Definition 1: ALS-B (Behavioral Shift / Observed) A structural change in search reception behavior where the presence of generative summaries is associated with lower outbound verification behavior (e.g., off-site clicks) and higher rates of early session termination, as observed in browsing-log data.

Definition 2: ALS-T (Legitimacy Transfer / Hypothesis) A theoretical mechanism explaining ALS-B, wherein user epistemic trust shifts from "source-based validation" to the "perceived completeness, fluency, and persuasiveness of the generated answer." This shift suppresses verification through both economic incentives (rational ignorance) and cognitive biases.

1.1 Methodology

To ensure rigor, this review employs a structured synthesis approach spanning computer science, cognitive psychology, and information systems.

Data Sources: ACM Digital Library, ACL Anthology, Google Scholar, and reputable industry reports (e.g., Pew Research Center). Official documentation from search providers (Google Search Central, Perplexity Help) is used for system specifications.

Search Window: 2019–2026 (focusing on the LLM era), with exceptions for foundational theories (e.g., 1950s–1990s for automation bias and rational choice).

Keywords: "generative search," "AI Overviews click," "zero-click," "automation bias," "processing fluency truth," "LLM persuasiveness," "hallucination acceptance."

Claim Taxonomy: To distinguish evidence levels, each section applies the following taxonomy:

[Documented]: Official specifications or policy documents (e.g., Google AI features).

[Observed]: Behavioral logs or quantitative measurements (e.g., Pew data).

[Peer-Reviewed]: Findings from peer-reviewed conferences/journals (e.g., ICLR, NHB, MISQ).

[Hypothesis]: Theoretical mechanisms proposed by authors (e.g., ALS-T).

[Interpretation]: Logical inferences connecting observations to theories (causality is not claimed).

2. Generative Search & GEO: The Shift to Answer Engines

Claim Type: Documented (Official Specs) & Observed (Behavioral Data)

2.1 Interface Shift: From Navigation to Consumption

[Documented] Major providers have structurally altered the Search Engine Results Page (SERP). Google has introduced "AI Overviews" and is testing "AI-only" search modes, which present synthesized answers above traditional results [1][2][3]. Concurrently, standalone answer engines like Perplexity provide synthesized answers with citations via separate conversational interfaces [4]. [Interpretation] Unlike traditional interfaces necessitating navigation through "10 blue links," these features are designed to satisfy information needs directly on the results page. This alters the default interaction path from link-navigation to summary-consumption. (C1)

2.2 Observed Reduction in Verification Behavior

[Observed] Pew Research Center's browsing-log analysis (March 2025, U.S., n=900) provides empirical evidence of ALS-B. In visits to Google search result pages, outbound clicks to external websites occur in 8% of visits where an AI summary is present, compared to 15% without. Clicks on cited sources within the AI summary occur in only 1% of such visits. Consequently, the share of visits that ended the browsing session on the search page rises to 26% with AI summaries (vs. 16% without) [5]. (Note: "Session termination" is defined as a browser exit or inactivity for 5 seconds or more following the SERP visit. Click classification distinguishes between traditional organic links and citations embedded within AI summaries.)
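The size of these gaps is easier to compare when expressed both in percentage points and as ratios. The short sketch below only re-expresses the four shares quoted above from [5]; the variable and function names are illustrative, and no additional data is assumed.

```python
# Shares quoted above from the Pew browsing-log analysis [5].
click_with_summary = 0.08      # outbound click, AI summary present
click_without_summary = 0.15   # outbound click, no AI summary
end_with_summary = 0.26        # session ended on the search page, AI summary present
end_without_summary = 0.16     # session ended on the search page, no AI summary


def gap_in_points(p_with: float, p_without: float) -> float:
    """Absolute difference (with minus without) in percentage points."""
    return round((p_with - p_without) * 100, 1)


def ratio(p_with: float, p_without: float) -> float:
    """Share with an AI summary relative to the share without one."""
    return p_with / p_without


click_gap = gap_in_points(click_with_summary, click_without_summary)
click_ratio = ratio(click_with_summary, click_without_summary)
end_gap = gap_in_points(end_with_summary, end_without_summary)
end_ratio = ratio(end_with_summary, end_without_summary)

print(f"Outbound clicks: {click_gap:+.1f} points, ratio {click_ratio:.2f}")
print(f"Session ends:    {end_gap:+.1f} points, ratio {end_ratio:.2f}")
```

Read this way, outbound clicking with an AI summary occurs at roughly half the rate seen without one, while ending the session on the search page is roughly 1.6 times as common. These are descriptive ratios of reported shares, not effect estimates.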

[Interpretation] These observations are consistent with the hypothesis of "Structural Increase in Verification Costs." While the UI reduces the cognitive effort of searching, it effectively increases the friction required to verify primary sources, making "satisficing" the economically dominant strategy. The observed association does not identify causality; we treat it as behavioral evidence consistent with ALS-B. (C2)

Research Gap:

Limitations of Prior Work: "Generative Engine Optimization (GEO)" studies [6] focus on content features that improve visibility (citation likelihood), but they do not measure how users interact with these citations.

Unmeasured Variables: The probability of actual verification given the presence of a citation link: $P(verify \mid ui\_friction, perceived\_completeness)$.

3. HCI & Cognitive Science: Automation Bias and Fluency

Claim Type: Peer-Reviewed (Foundational/Cognitive) & Preprints (Emerging)

3.1 Automation Bias and the Truth Effect

[Peer-Reviewed] "Automation Bias" is the tendency to over-rely on automated aids [7]. In the context of generative AI, this is reinforced by Processing Fluency. Research in cognitive psychology confirms the "Illusory Truth Effect," where information that is easier to process (fluent, coherent) is more likely to be judged as true, independent of its accuracy [8][9]. [Interpretation] ALS-T posits that the linguistic fluency of LLMs acts as a heuristic cue for validity, reducing the cognitive trigger for verification. (C3)

3.2 Cognitive Offloading and Critical Thinking

[Preprint/Suggestive] Emerging studies, such as Kos'myna et al. (2025), suggest that AI-assisted tasks may be associated with lower self-reported ownership of output and altered neural markers (EEG) [10]. [Interpretation] While this evidence is preliminary (non-peer-reviewed), it aligns with the concept of "cognitive offloading," where critical evaluation is outsourced to the system. Note: We treat this as suggestive auxiliary evidence.

3.3 The ELIZA Effect and Social Acceptance

[Peer-Reviewed] The "ELIZA Effect" [11] describes users attributing understanding to computer programs. [Hypothesis] We hypothesize that modern LLMs reactivate this as "Social Automation Bias," where conversational tones lower skepticism.

4. IR & NLP Evaluation: The Divergence of Factuality and Persuasiveness

Claim Type: Peer-Reviewed (Evaluation Metrics & Psychology)

4.1 Persuasiveness as an Independent Objective

[Peer-Reviewed] Research accepted at ICLR 2025 [12] and Nature Human Behaviour [13] demonstrates that "persuasiveness" is a distinct property from "factuality." Formally, the optimization objective for an LLM can be modeled as maximizing a persuasion score $S_{persuasion}(response, user)$, which is not constrained by a factuality score $S_{factuality}(response, ground\_truth)$. [Result] Salvi et al. (2025) provide empirical evidence that GPT-4 achieves higher conversational persuasiveness than humans in controlled debates, particularly when arguments are personalized [13]. (C4)
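One way to write down this decoupling, offered as a sketch and not as a formulation taken from [12] or [13]: let $r$ denote a response, $u$ the user, and $g$ the ground truth. The threshold $\tau$ is a hypothetical parameter introduced here for illustration.

$$
r^{*} = \arg\max_{r} \; S_{persuasion}(r, u)
$$

$$
r^{*}_{\tau} = \arg\max_{r} \; S_{persuasion}(r, u) \quad \text{subject to} \quad S_{factuality}(r, g) \ge \tau
$$

The first objective places no restriction on $S_{factuality}$, so its maximizer may be a low-factuality response. The second adds an explicit factuality floor; the two maximizers coincide only when the most persuasive response already satisfies $S_{factuality}(r, g) \ge \tau$.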

4.2 The "Danger Zone" (Risk-Critical Region)

[Proposed Framework] We define the "Risk-Critical Region" (Danger Zone) as the quadrant of Low Factuality × High Persuasiveness.

Factuality \ Persuasiveness | Low Persuasiveness | High Persuasiveness |

High Factuality | Safe but Dry | Ideal State |

Low Factuality | Detected Error | Danger Zone (ALS Risk) |

Existing metrics like G-Eval [14] assess output quality but do not model user gullibility specifically within this Danger Zone.
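The quadrant table above can be expressed as a small classifier. This is a minimal sketch under stated assumptions: both scores are taken to lie in [0, 1], and the cut-off of 0.5 is an arbitrary placeholder. Neither the scoring functions nor the threshold are specified in this review.

```python
from enum import Enum


class Quadrant(Enum):
    SAFE_BUT_DRY = "Safe but Dry"
    IDEAL_STATE = "Ideal State"
    DETECTED_ERROR = "Detected Error"
    DANGER_ZONE = "Danger Zone (ALS Risk)"


def classify(factuality: float, persuasiveness: float,
             threshold: float = 0.5) -> Quadrant:
    """Map a (factuality, persuasiveness) pair to a quadrant of the table.

    Assumption: scores are in [0, 1]; `threshold` is a hypothetical cut-off.
    """
    high_factuality = factuality >= threshold
    high_persuasiveness = persuasiveness >= threshold
    if high_factuality:
        return Quadrant.IDEAL_STATE if high_persuasiveness else Quadrant.SAFE_BUT_DRY
    return Quadrant.DANGER_ZONE if high_persuasiveness else Quadrant.DETECTED_ERROR


# A fluent but inaccurate answer falls into the risk-critical region.
print(classify(factuality=0.2, persuasiveness=0.9).value)
```

In practice the two scores would come from separate evaluators (for example, a factuality checker and a human or model-based persuasiveness rating), which is exactly the pairing that single-score quality metrics do not provide.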

5. Economic Theory: Rational Ignorance and Stopping Rules

Claim Type: Theoretical Application (Classical Theory)

5.1 Stopping Rules in Generative Environments

[Peer-Reviewed] Browne et al. (2007) established "Stopping Rules" for information search [15]. Under Information Foraging Theory [16], users maximize the rate of valuable information gained per unit of cost. [Theoretical Application] If the perceived cost of verification (e.g., opening a new tab) exceeds the expected gain from verifying, users rationally choose Rational Ignorance (Downs, 1957 [17]) and settle for Satisficing (Simon, 1955 [18]). (C5)
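The stopping condition can be made concrete with a toy decision rule. The decomposition of "expected gain" into a perceived error probability times the loss from acting on a wrong answer is an assumption made here for illustration; it is not a model taken from [15] through [18], and all numbers are hypothetical.

```python
def expected_gain(perceived_error_prob: float, loss_if_wrong: float) -> float:
    """Expected loss avoided by verifying (illustrative assumption)."""
    return perceived_error_prob * loss_if_wrong


def should_verify(perceived_error_prob: float, loss_if_wrong: float,
                  verification_cost: float) -> bool:
    """Verify only when the expected gain exceeds the perceived cost."""
    return expected_gain(perceived_error_prob, loss_if_wrong) > verification_cost


def break_even_error_prob(loss_if_wrong: float, verification_cost: float) -> float:
    """Perceived error probability above which verification pays off."""
    return verification_cost / loss_if_wrong


# Hypothetical units: a wrong answer costs 10, checking a source costs 1.
print(should_verify(0.05, loss_if_wrong=10, verification_cost=1))  # fluent answer, low perceived error
print(should_verify(0.20, loss_if_wrong=10, verification_cost=1))  # visibly shaky answer
print(break_even_error_prob(loss_if_wrong=10, verification_cost=1))
```

Under this toy rule, anything that lowers the perceived error probability (such as fluency) or raises the verification cost (such as UI friction) pushes the user below the break-even point without any change in the answer's actual accuracy.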

6. Experimental Design Proposal (Addressing Research Gaps)

To validate ALS, we propose the following specific experimental designs.

Exp 1: Impact of Visual Completeness on Verification (Testing ALS-B)

Independent Variable (IV): Visual Completeness of the AI summary (Low: short text / High: structured, long text with rich formatting).

Dependent Variable (DV): Outbound Click-Through Rate (CTR), Time to Session Termination (Pew definition).

Identification: Randomized A/B testing on live traffic.

Hypothesis: Higher visual completeness causes lower outbound CTR (a negative effect, identified by random assignment).
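For Exp 1, the comparison of outbound CTR between the two arms could be analyzed with a standard two-proportion z-test. The sketch below uses only the Python standard library; the click counts are invented for illustration and do not come from any study cited here.

```python
import math


def two_proportion_z_test(clicks_low: int, n_low: int,
                          clicks_high: int, n_high: int):
    """Pooled two-proportion z-test for a difference in CTR.

    Returns (difference high - low, z statistic, two-sided p-value).
    """
    p_low = clicks_low / n_low
    p_high = clicks_high / n_high
    pooled = (clicks_low + clicks_high) / (n_low + n_high)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_low + 1 / n_high))
    z = (p_high - p_low) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p_high - p_low, z, p_value


# Hypothetical counts: 150/1000 outbound clicks in the low-completeness arm,
# 100/1000 in the high-completeness arm.
diff, z, p_value = two_proportion_z_test(150, 1000, 100, 1000)
print(f"CTR difference: {diff:+.3f}, z = {z:.2f}, p = {p_value:.4f}")
```

Because the hypothesis is directional, a one-sided test would also be defensible; the two-sided p-value shown here is the more conservative choice. If the same user contributes multiple visits, the independence assumption behind this test no longer holds and user-level aggregation or clustering would be needed.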

Exp 2: Persuasion vs. Factuality in the Danger Zone (Testing ALS-T)

IV: Factuality (True/False) $\times$ Persuasiveness (Low/High; manipulated via tone/length).

DV: User Acceptance Rate (accepting a false answer is counted as a detection error).

Identification: Controlled user study with $2 \times 2$ factorial design.

Analysis: Logit model with clustered standard errors at the user level.
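For a fully crossed $2 \times 2$ design, the point estimates of a saturated logit model (two main effects plus their interaction) can be read directly from the log-odds of the four cell acceptance rates. The sketch below illustrates this with invented counts. It does not compute the user-clustered standard errors named in the analysis plan, which would require a statistics package, and it assumes no cell has an acceptance rate of exactly 0 or 1.

```python
import math


def log_odds(accepted: int, shown: int) -> float:
    """Log-odds of acceptance in one cell (rate must be strictly in (0, 1))."""
    p = accepted / shown
    return math.log(p / (1 - p))


# Hypothetical counts: (factuality, persuasiveness) -> (accepted, shown).
cells = {
    ("true", "low"): (70, 100),
    ("true", "high"): (85, 100),
    ("false", "low"): (30, 100),
    ("false", "high"): (60, 100),
}


def saturated_logit_coefficients(cells: dict) -> dict:
    """Coefficients with (true, low) as the reference cell."""
    lo = {key: log_odds(*counts) for key, counts in cells.items()}
    intercept = lo[("true", "low")]
    return {
        "intercept": intercept,
        "false": lo[("false", "low")] - intercept,
        "high_persuasiveness": lo[("true", "high")] - intercept,
        # Difference-in-differences on the log-odds scale.
        "false_x_high": (lo[("false", "high")] - lo[("false", "low")])
                        - (lo[("true", "high")] - lo[("true", "low")]),
    }


coefs = saturated_logit_coefficients(cells)
for name, value in coefs.items():
    print(f"{name:>20}: {value:+.3f}")
```

A positive interaction term would mean that high persuasiveness raises acceptance more for false answers than for true ones, which is the pattern ALS-T predicts for the Danger Zone.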

7. Comprehensive View of Algorithmic Legitimacy Shift (ALS) and Conclusion

Claim Type: Conceptual Synthesis (The Unified Model)

This review integrates supply-side specifications and demand-side observations to establish the ALS Model.

Established [Observed/Documented]: The interface shift to generative answers is associated with a measurable decline in verification clicks and earlier session termination (Pew; C1, C2).

Supported [Peer-Reviewed]: LLMs can be optimized for persuasiveness independently of factuality, and linguistic fluency enhances truth judgments (ICLR, NHB, Psych; C3, C4).

Hypothesized [ALS-T]: These factors facilitate a legitimacy transfer where "rational ignorance" and "fluency heuristics" suppress verification in the Danger Zone (C5).

Conclusion: ALS is a socio-technical shift. Future interventions must redesign the "economics of verification" within the UI to counteract the rationality of ignorance.

References

[Official / Documented]

1. Google. (2024). "Generative AI in Search: Let Google do the searching for you." Google The Keyword Blog.

2. Google. (2024). "AI Overviews: About AI features in Search." Google Search Central.

3. Google. (2025). "AI Mode in Search: Experiments and Details." Google Search Help.

4. Perplexity. (2025). "Citations and Sources." Perplexity Help Center.

[Observed / Behavioral Data]

5. Pew Research Center. (2025). "About 1 in 5 Google search queries now lead to AI Overviews." Pew Short Reads. (Methodology: Log-based analysis, March 2025).

[Peer-Reviewed / Classical Theory]

6. Aggarwal, P., et al. (2024). "GEO: Generative Engine Optimization." Proceedings of KDD '24. https://doi.org/10.1145/3637528.3671900

7. Parasuraman, R., & Riley, V. (1997). "Humans and Automation." Human Factors.

8. Reber, R., & Schwarz, N. (1999). "Effects of Perceptual Fluency on Judgments of Truth." Consciousness and Cognition.

9. Unkelbach, C. (2007). "The unintuitive origin of fluent truth." Journal of Experimental Psychology.

10. Kos'myna, N., et al. (2025). "Your Brain on ChatGPT." Project Website / arXiv Preprint. (Note: Suggestive evidence).

11. Weizenbaum, J. (1966). "ELIZA." Communications of the ACM.

12. Singh, S., et al. (2025). "Measuring and Improving Persuasiveness of Large Language Models." ICLR 2025.

13. Salvi, F., Horta Ribeiro, M., Gallotti, R., & West, R. (2025). "On the conversational persuasiveness of GPT-4." Nature Human Behaviour. https://doi.org/10.1038/s41562-025-02194-6

14. Liu, Y., et al. (2023). "G-Eval." EMNLP 2023.

15. Browne, G. J., et al. (2007). "Cognitive Stopping Rules." MIS Quarterly.

16. Pirolli, P., & Card, S. (1999). "Information Foraging." Psychological Review.

17. Downs, A. (1957). An Economic Theory of Democracy. Harper.

18. Simon, H. A. (1955). "A Behavioral Model of Rational Choice." The Quarterly Journal of Economics.

Appendix A: Evidence Matrix (Audit Grade)

Claim ID | Claim (Atomic) | Claim Class | Source | Dependent Variable | What it does NOT show |

C1 | Interface shift to consumption | Documented | [1][2][3] | N/A (UI Spec) | User preference |

C2 | Summaries are associated with fewer clicks | Observed | [5] | Share of visits with outbound clicks, session termination | Causal "why" (satisfaction vs. giving up) |

C3 | Fluency increases truth judgment | Peer-Reviewed | [8][9] | Truth Rating | Effect specific to LLM-generated text |

C4 | LLMs optimized for persuasion | Peer-Reviewed | [12][13] | Win-rate in debates | Acceptance of false info specifically |

C5 | Rational non-verification | Theory | [17][18] | N/A (Model) | Empirical threshold for verification cost |
