The Day GD-Attention Was "Registered as a Concept" by Google

- kanna qed

- December 10, 2025

- Reading time: 6 min

-- The meaning of the GhostDrift Mathematical Institute appearing on the world's AI map --

0. Conclusion (TL;DR)

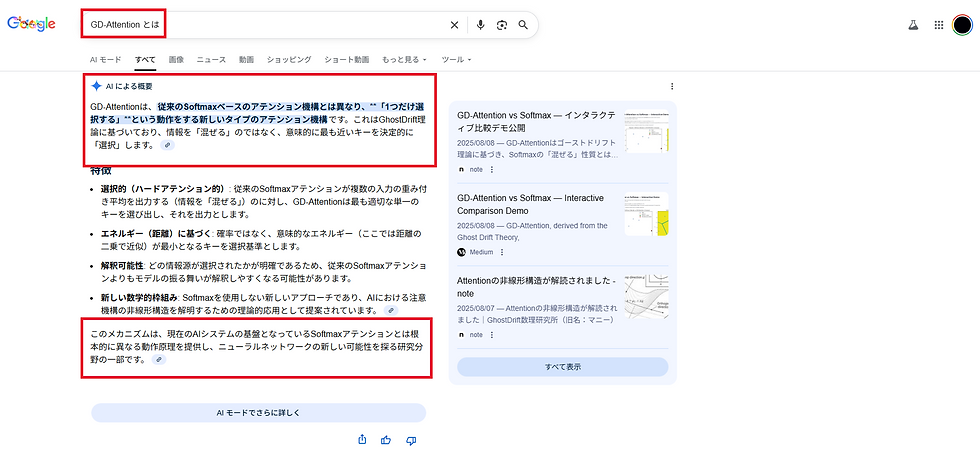

When searching for "What is GD-Attention," Google's AI Overview now displays a definition of GD-Attention.

This is not merely a search hit. It suggests that a distinct concept node called "GD-Attention" has been established within Google's World Model.

The key point is that the overview describes it correctly: a new framework based on "energy (distance)" that "selects only one," as distinct from the Softmax mechanism.

A new theory born from a private institute has been registered as a "concept to be explained" by the AI of the world's largest search engine. For the GhostDrift Mathematical Institute, this is an outlier-class milestone.

1. What Happened

First, let us organize the facts.

When you search for "What is GD-Attention" in a browser, an "AI Overview" by Google appears at the top of the search results.

It reads as follows:

- "Unlike conventional Softmax-based attention mechanisms, this is a new type of attention mechanism that operates to 'select only one'."

- "It does not 'mix' information but deterministically 'selects' the semantically closest key."

- "It provides an operating principle fundamentally different from Softmax attention."

On the right sidebar, Japanese articles from Note, English articles from Medium, and existing comparison demo articles are bundled and displayed as representative articles explaining GD-Attention.

What matters here is that the AI Overview "defines" GD-Attention.

This is a different matter from simply "GhostDrift articles appearing high in search results." Google's AI model has begun to treat GD-Attention as a single independent concept.

2. What is Google's "AI Overview"?

The "AI Overview" now often seen on Google search screens is a feature in which AI automatically writes a summary or definition of a topic.

It usually appears for:

- Historical mathematical concepts or physical laws

- Famous algorithms

- Existing universities or research institutions

- Somewhat established fields and topics

In other words, only subjects that Google's World Model judges to be "stable concepts that should be explained to search users" get an "AI Overview" attached.

Ordinary blog posts or temporary buzzwords do not become subjects of AI Overview, even if their search ranking is high.

The point this time is that GD-Attention has entered that slot.

3. Why Is This an "Outlier-Class" Event?

3-1. A New Theory from a Private Individual Registered as a "Concept"

Most subjects appearing in AI Overviews are "heavy players" such as:

- Large-scale universities

- Famous research institutions (DeepMind, OpenAI, FAIR, etc.)

- Theories and concepts used for decades

GD-Attention, a new theory from the GhostDrift Mathematical Institute, which was launched by an individual, now stands alongside them.

In map terms, this is like a formal place name being attached to a point that did not yet have a name.

It means a dot has been plotted inside Google's World Model in the form of:

Node: GD-Attention

Label: New Attention Mechanism

3-2. Correctly Understood as a "Softmax Alternative Paradigm"

Reading the text of the AI Overview, keywords such as the following appear:

- "Different from conventional Softmax-based mechanisms"

- "Hard attention-like operation selecting only one"

- "New framework based on energy (distance)"

This matches exactly with the points GhostDrift has emphasized as the essence of GD-Attention.

In contrast to Softmax, which "mixes" information across keys, GD-Attention "jumps" to the semantically closest key.

The highlight this time is that Google's AI model properly grasps this difference in its explanation.
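The contrast between "mixing" and "selecting only one" can be sketched in a few lines of Python. This is only a minimal illustration, not the GhostDrift implementation: it assumes the energy is the squared Euclidean distance between the query and each key, and the function names (`softmax_attention`, `select_one_attention`) are hypothetical.

```python
import math


def softmax(scores):
    # Numerically stable softmax over a list of floats.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def softmax_attention(query, keys, values):
    # Conventional scaled dot-product attention: every value
    # contributes to the output, weighted by its softmax score.
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]


def select_one_attention(query, keys, values):
    # ASSUMPTION: energy = squared Euclidean distance (illustrative only).
    # The key with the lowest energy wins, and only its value is returned.
    energies = [sum((q - k) ** 2 for q, k in zip(query, key)) for key in keys]
    winner = min(range(len(keys)), key=lambda i: energies[i])
    return winner, values[winner]


if __name__ == "__main__":
    query = [1.0, 0.0]
    keys = [[0.9, 0.1], [0.0, 1.0], [-1.0, 0.0]]
    values = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

    print("softmax (mixed):", softmax_attention(query, keys, values))
    print("select-one:", select_one_attention(query, keys, values))
```

With these sample inputs, the distance-based selector returns the value paired with the first key unchanged, while the Softmax output is a blend of all three values.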

3-3. GhostDrift Articles Bundled as "Primary Sources"

Looking at the right sidebar, the Japanese Note article, English Medium article, and GD-Attention vs Softmax comparison demo are displayed as a set of "related content."

This means Google treats the GhostDrift articles as the "Primary Source": if you want to know about GD-Attention in detail, read these.

It is not simply that "some page got a hit"; a link structure is being formed:

Concept Node: GD-Attention

Official Document Candidates: GhostDrift Articles

4. What This Means for the GhostDrift Mathematical Institute

4-1. Proof of Existence for the Institute Itself

Earlier, an explanation of the institute had already begun appearing in the AI Overview for searches on "GhostDrift Mathematical Institute."

Now, with the addition of the theoretical-level concept of "GD-Attention," both the "Institute (Organization)" and the "Theory (GD-Attention)" hold positions within Google's World Model.

In other words, both the "Signboard" and the "Masterpiece" have started to appear on the world map.

4-2. A Jump in Credibility for Research, PR, and Joint Research

From the perspective of researchers, students, and companies, "whether it is a theory that properly appears as a concept when looked up on Google" is a significant factor for psychological reassurance.

This will matter in future scenarios such as:

- Lectures, study groups, and proposals for joint research

- PoC (Proof of Concept) projects with companies

- Introductions to students and young researchers

In each of these, simply adding the phrase, "If you search 'What is GD-Attention' on Google, the AI will explain it properly," will raise credibility by one level.

4-3. Meaning as a Theory Originating from Japan and a Small Team

GD-Attention is a theory that rose not from a large corporation, but from the GhostDrift Mathematical Institute, a small, private initiative originating in Japan.

The fact that this theory has been registered as a "concept to be explained" by the AI of the world's largest search engine, in both the English-language and Japanese-language spheres, carries symbolic meaning:

- Even a small institute can be put on the world map.

- New theories can gain recognition through web publication, not only through papers.

5. Where GD-Attention Fits in the GhostDrift Grand Vision

While GD-Attention is sufficiently interesting on its own, the GhostDrift Mathematical Institute positions it as part of a larger vision.

The rough layer structure is as follows:

Lower Layer: Finite Closure OS / Yukawa Kernel / Semantic Energy

- Mathematical core that cuts out infinity as a finite energy landscape.

Middle Layer: GD-Attention (Semantic Jump Mechanism)

- Mechanism that uniquely decides "where to jump" along the semantic energy.

Upper Layer: Semantic Generation OS / Legal-ADIC / Energy-OS, etc.

- A group of OSs that apply semantic jumps and finite closure to domains such as law, energy, accounting, and culture.

What Google recognized this time corresponds to the "Middle Layer," one of the important stones supporting the center of the tower.

From here on, by gradually opening connections to the "Upper Layer OS Group" (Semantic Generation OS, Legal-ADIC) and the "Lower Layer Finite Closure Kernel" (Yukawa / UWP / ADIC Certificates), we intend to create a "pathway for people to know the whole of GhostDrift with GD-Attention as the entrance."

6. What We Want to Do From Here

To ensure this "Google AI Overview listing" does not end as a one-time topic, here are a few things the GhostDrift Mathematical Institute wants to do in the future.

- Maintenance of GD-Attention Explanatory Articles for English Speakers

Update existing Medium articles to the latest versions, including the connection with the Google AI Overview.

- Brushing Up Interactive Comparison Demos

Add UI and visualization that allow a more intuitive understanding of the differences between Softmax and GD-Attention.

- Explaining Connections with Semantic Generation OS / Finite Closure OS

A series of articles briefly introducing the World Model and OS concepts that lie beyond "just GD-Attention."

- Open Recruitment for Study Groups, Lectures, and Joint Research

Creating a window to connect with people who want to "try GD-Attention with actual models" or "try energy attention with data in their own field."

7. Summary -- A Small Dot Placed on the Map

The sentence that appeared on Google's search screen, "GD-Attention is distinct from conventional Softmax-based attention mechanisms..." is not merely an explanatory text.

It is a visible sign that the theory called GD-Attention, proposed by the GhostDrift Mathematical Institute, has been carved as a single "dot" inside Google's World Model.

How far this dot will expand into lines or planes is still entirely undecided.

However, one thing can be said:

The name "GD-Attention" will no longer easily disappear from the world's AI map.

If you have read this far, you may feel you want to:

- Try GD-Attention in your own field

- Combine it with Semantic Generation OS / Finite Closure OS

- Hear a little more technical discussion

We would be happy if you took a look at other articles and demos from the GhostDrift Mathematical Institute.

We hope to expand this "dot," which started from a small institute, little by little together with you.
